Data analytics with real-time data streams has become imperative for data-driven enterprises such as DocuSign, which supports global contract collaboration for millions of users. To achieve these outcomes, organizations must establish an agile and scalable foundation to access, analyze, and manage real-time and batch data streams shared across multiple data producers and business units, so that they can drive key business insights.

The DocuSign Case: Data Governance and Speed of Data Ingestion

Traditionally, organizations have pursued this goal with data warehousing technologies, which consolidate raw data so that powerful analytics tools can distill it into impactful insights. For progressive, fast-growing organizations such as DocuSign, however, this approach runs into a key limitation: scaling a centralized repository to accommodate a growing number of data streams for analytics use cases is complicated, expensive, and prone to data governance and compliance issues.

Consider the case of DocuSign, which had previously migrated its data workloads from on-premises data warehouses to a cloud data warehouse. The objective was to gain quick access to raw data and make it more understandable for its analytics teams. While the technology promised a unified, synthesized view of all data sources, cost-effective analytics and timely insights for business teams remained elusive. As DocuSign continued to expand its data portfolio to extract rich insights into its technology, user base, business operations, and external market conditions, the organization faced several challenges with its existing data platform: limited scalability, expensive resource provisioning for compute-intensive data science applications, and inadequate compliance controls for segregating data sources from data consumers.

Specifically, DocuSign was planning to support real-time streaming use cases alongside workloads containing Personally Identifiable Information (PII). Because the DocuSign e-signature solution is a SaaS platform, it must meet stringent compliance requirements under global regulations across many industry verticals. The agility to scale analytics and accelerate data processing therefore had to come without compromising robust data security, privacy, and governance controls.

DocuSign engaged Mactores to address these technology and business challenges with an advanced, efficient, and agile data platform. Mactores ran a detailed assessment and collaboration program with the company to develop an end-to-end data modernization strategy, then migrated the data workloads to the Mactores Aedeon Data Lake platform. This secure, resilient, and scalable platform enabled modern data lake solutions for a range of analytics use cases on real-time data streams. It also prepared DocuSign to rapidly expand a rich and diverse data portfolio while upholding security, compliance, and governance standards for a global user base.

In the coming weeks, DocuSign will achieve 10x agility in its DataOps process and 15x productivity for AI/ML teams by automating 80% of data preprocessing tasks for end-to-end DataOps and MLOps integration.

The Mactores Aedeon Data Lake Solution

Real-time data streams hold high-value insights that can fuel innovation and drive competitive strength, even in uncertain economic and market conditions. As a market leader in the e-signature space, DocuSign had already begun its digital transformation journey by adopting a data warehouse platform, but that platform was inherently limited in its ability to store and analyze growing volumes of unstructured and semi-structured data streams in real time.

The traditional data warehouse architecture caused the following key challenges:

- Limited scalability, because the platform was tightly coupled to the underlying hardware.

- Data workloads had to be preprocessed and stored in a structured form instead of being analyzed as information streams in real time.

- High operational costs for platform administration, data engineering, and storage.

- Data assets were maintained in silos due to proprietary formats. Accessing, refining, and joining disparate sources of information was complex and expensive, yet necessary before running mission-critical analytics use cases.

- Data storage was under-optimized because cold (infrequently used) and warm (frequently used) data assets were not sufficiently segregated.

- Ad-hoc data science use cases that required highly scalable infrastructure resources came at a very high cost. Unlike data analytics use cases, where resource consumption is steady, data science use cases require elastic resource provisioning, which significantly increased data warehouse consumption charges.

- Data sources and consumers were not adequately segregated. The lack of compliance controls forced teams to apply pre-processing and governance rules manually, ultimately leading to information silos and undermining data democratization.

Mactores conducted a 16-week assessment program and multiple workshops to understand DocuSign’s data platform and security requirements. As part of an end-to-end data modernization strategy, Mactores proposed the Aedeon Data Lake solution, which guarantees three critical capabilities of a modern data platform: it enables, governs, and engages data. By implementing the Aedeon Data Lake platform, DocuSign could obtain production-ready data in real time from workloads generated across multiple, disparate information streams in diverse structures and formats. As a result, teams across the enterprise could run custom analytics use cases to inform business decisions. Mactores delivered a production-ready data lake within a few weeks by tailoring the technology implementation and data migration to the specific technology and business challenges facing DocuSign.

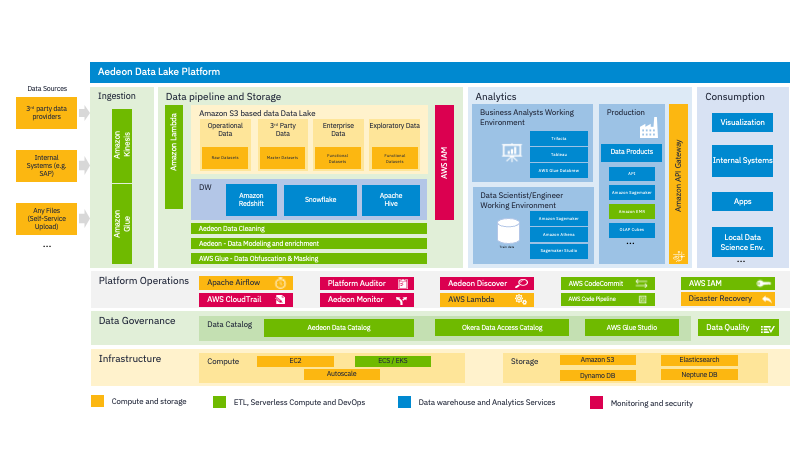

Let’s take a closer look at the Aedeon Data Lake platform architecture and the features that helped overcome the issues facing a traditional data warehouse system.

The Aedeon Data Lake platform stack is described in terms of its functional categories below:

Compute and Storage

- Support for multiple data sources, including third-party providers, internal business systems, and self-service data uploads.

- Amazon S3 offers a variety of storage classes to match usage scenarios, pricing, and performance requirements.

- Highly scalable Amazon ECS infrastructure with auto scaling rapidly provisions infrastructure resources.

- AWS services and platform options such as Amazon Managed Workflows for Apache Airflow, AWS Lambda, disaster recovery capabilities, and a range of AWS APIs help automate security, monitoring, and provisioning tasks in the data pipeline.
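To make the storage-class point concrete, here is a minimal sketch of an S3 lifecycle configuration that moves aging objects out of S3 Standard into cheaper classes, one way to separate warm and cold data. The `raw/` prefix, the 30- and 90-day thresholds, and the bucket name are hypothetical values for illustration, not DocuSign's settings. The dictionary follows the shape accepted by boto3's `put_bucket_lifecycle_configuration`; the actual call is left commented out so the sketch runs without AWS credentials.

```python
import json


def build_lifecycle_rules(prefix: str, ia_after_days: int = 30,
                          glacier_after_days: int = 90) -> dict:
    """Return an S3 lifecycle configuration that tiers objects under `prefix`.

    Objects move to S3 Standard-IA after `ia_after_days` and to S3 Glacier
    Flexible Retrieval (storage class "GLACIER") after `glacier_after_days`.
    The default thresholds are illustrative, not recommendations.
    """
    # S3 does not transition objects to STANDARD_IA before they are 30 days old.
    if ia_after_days < 30:
        raise ValueError("STANDARD_IA transitions need at least 30 days")
    # Keep objects in Standard-IA for at least its 30-day minimum storage duration.
    if glacier_after_days < ia_after_days + 30:
        raise ValueError("GLACIER transition should be at least 30 days after STANDARD_IA")
    return {
        "Rules": [
            {
                "ID": f"tier-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = build_lifecycle_rules("raw/")
print(json.dumps(config, indent=2))

# With AWS credentials configured, the same dictionary could be applied with boto3:
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-data-lake",  # hypothetical bucket name
#     LifecycleConfiguration=config,
# )
```

Building the configuration as plain data keeps it reviewable and testable before it is applied, which also suits the infrastructure-as-code approach mentioned later in this article.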

ETL, Serverless Compute, and DevOps

- An event-driven ETL data pipeline provides the core functionality of a modern data lake: it triggers ETL jobs for real-time data streams as soon as the data arrives in the system.

- AWS Glue makes it easy to discover, process, and combine data from disparate sources, so all information is available for real-time analytics within minutes of ingestion.

- Administrative overhead is reduced because AWS Glue offers a standardized visual interface for data integration and ETL tasks. ETL workflows can be executed without writing code, which requires fewer data engineering resources. AWS Glue also automates extracting, cleaning, normalizing, combining, and loading data, as well as running the ETL workflows themselves.

- Data governance and security management for the data lake are simplified, standardized, and scalable. This was achieved using the AWS Glue Data Catalog, which provides a central catalog of data lake assets and, together with IAM policies, governs access to Amazon S3 resources and other AWS services.
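The event-driven pattern described above can be sketched as an AWS Lambda function, subscribed to S3 `ObjectCreated` notifications, that starts an AWS Glue job for each newly arrived object. This is a minimal sketch under stated assumptions, not the Aedeon implementation: the prefix-to-job mapping, the job names, and the `--source_path` job argument are hypothetical. The Glue client is passed in so the routing logic can run offline against a fake.

```python
from urllib.parse import unquote_plus

# Hypothetical mapping from landing-zone prefixes to AWS Glue job names.
JOB_FOR_PREFIX = {
    "raw/events/": "events-to-parquet",
    "raw/uploads/": "uploads-to-parquet",
}


def route_object(key: str):
    """Return the Glue job that should process `key`, or None if none applies."""
    for prefix, job_name in JOB_FOR_PREFIX.items():
        if key.startswith(prefix):
            return job_name
    return None


def handle_s3_event(event: dict, glue_client) -> list:
    """Start one Glue job run per new object in an S3 event notification.

    `glue_client` is expected to behave like boto3.client("glue"); passing it
    in keeps the routing logic testable without AWS access.
    """
    run_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        job_name = route_object(key)
        if job_name is None:
            continue  # this pipeline ignores objects outside known prefixes
        response = glue_client.start_job_run(
            JobName=job_name,
            # Glue job parameters are passed with a leading "--".
            Arguments={"--source_path": f"s3://{bucket}/{key}"},
        )
        run_ids.append(response["JobRunId"])
    return run_ids


def lambda_handler(event, context):
    """AWS Lambda entry point wired to S3 ObjectCreated notifications."""
    import boto3  # bundled with the AWS Lambda Python runtime
    return {"started": handle_s3_event(event, boto3.client("glue"))}


class FakeGlue:
    """Offline stand-in that records job starts instead of running them."""

    def __init__(self):
        self.calls = []

    def start_job_run(self, JobName, Arguments):
        self.calls.append((JobName, Arguments))
        return {"JobRunId": f"jr_{len(self.calls)}"}


sample_event = {"Records": [
    {"s3": {"bucket": {"name": "example-landing"},
            "object": {"key": "raw/events/2024/batch+1.json"}}},
    {"s3": {"bucket": {"name": "example-landing"},
            "object": {"key": "tmp/scratch.txt"}}},
]}
fake_glue = FakeGlue()
started = handle_s3_event(sample_event, fake_glue)
print(started)  # ['jr_1']
```

Only the first record matches a known prefix, so a single job run starts; the `tmp/` object is skipped. Keeping the AWS call behind an injected client is a design choice for testability, not a requirement of Lambda or Glue.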

Data Warehouse and Analytics

- Purpose-built business analytics and machine learning platforms allow users to develop, train, and deploy machine learning models with a few clicks.

- Amazon SageMaker supports common use cases such as anomaly detection, data extraction and analysis, and customized recommendations.

- Amazon Athena queries data directly in Amazon S3 without requiring users to manage the underlying infrastructure.

- An interactive GUI and integrations with internal systems and apps allow business teams to easily build their own analytics use cases.
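As a rough illustration of querying S3 data through Athena without managing infrastructure, the sketch below submits a query, polls until it reaches a terminal state, and reads the first page of results. It uses the boto3 Athena client methods `start_query_execution`, `get_query_execution`, and `get_query_results`; the database, table, SQL, and results bucket are hypothetical. A small fake client stands in for AWS so the sketch runs offline.

```python
import time

# Athena reports one of these states once a query has stopped running.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}


def run_athena_query(athena, sql: str, database: str, output_location: str,
                     poll_seconds: float = 1.0, timeout_seconds: float = 300.0) -> str:
    """Submit `sql` to Athena, wait for it to finish, and return the execution ID.

    `athena` is expected to behave like boto3.client("athena").
    """
    query_id = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = athena.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]["Status"]
        state = status["State"]
        if state in TERMINAL_STATES:
            if state != "SUCCEEDED":
                raise RuntimeError(f"query {query_id} {state}: {status.get('StateChangeReason', '')}")
            return query_id
        if time.monotonic() > deadline:
            raise TimeoutError(f"query {query_id} still {state} after {timeout_seconds}s")
        time.sleep(poll_seconds)


def fetch_rows(athena, query_id: str) -> list:
    """Return the first page of results as dicts keyed by column name.

    For SELECT queries Athena returns the header as the first row. Larger
    result sets need NextToken pagination, which this sketch omits.
    """
    rows = athena.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    values = [[cell.get("VarCharValue") for cell in row["Data"]] for row in rows]
    if not values:
        return []
    header, body = values[0], values[1:]
    return [dict(zip(header, row)) for row in body]


class FakeAthena:
    """Offline stand-in that replays a fixed sequence of query states."""

    def __init__(self, states):
        self.states = list(states)

    def start_query_execution(self, **kwargs):
        self.request = kwargs
        return {"QueryExecutionId": "q-1"}

    def get_query_execution(self, QueryExecutionId):
        return {"QueryExecution": {"Status": {"State": self.states.pop(0)}}}

    def get_query_results(self, QueryExecutionId):
        return {"ResultSet": {"Rows": [
            {"Data": [{"VarCharValue": "event_type"}, {"VarCharValue": "n"}]},
            {"Data": [{"VarCharValue": "signed"}, {"VarCharValue": "3"}]},
        ]}}


fake = FakeAthena(["QUEUED", "RUNNING", "SUCCEEDED"])
qid = run_athena_query(
    fake,
    "SELECT event_type, count(*) AS n FROM events GROUP BY event_type",
    database="analytics",                             # hypothetical database
    output_location="s3://example-athena-results/",  # hypothetical bucket
    poll_seconds=0,
)
print(fetch_rows(fake, qid))  # [{'event_type': 'signed', 'n': '3'}]
```

Athena returns every value as a string (`VarCharValue`), so callers would convert numeric columns themselves.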

Monitoring and Security

- Integration with AWS Identity and Access Management (IAM) and with monitoring and auditing tools such as Amazon CloudWatch and AWS CloudTrail ensures continuous security monitoring and automated compliance controls.

- Advanced tools such as Amazon Macie take the burden off users by offering a fully managed data security and privacy service. Macie uses machine learning and pattern matching to discover and protect growing volumes of sensitive business and PII data.
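One way IAM can segregate data consumers from data sources is to scope each consumer group to a single data lake prefix, so that, for example, analysts read a curated zone but are granted nothing on raw zones that may hold PII. The sketch below builds such a policy document; the bucket name, prefix, and policy name are hypothetical. It relies on the standard split between `s3:ListBucket` (granted on the bucket ARN, narrowed with the `s3:prefix` condition key) and `s3:GetObject` (granted on object ARNs).

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy document granting read-only access to one S3 prefix.

    The consumer can list keys and read objects under `prefix` only; this
    policy grants nothing on other prefixes in the same bucket.
    """
    prefix = prefix.strip("/") + "/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket ARN; the condition limits
                # which key prefixes the consumer may list.
                "Sid": "ListZoneOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # GetObject applies to object ARNs under the prefix.
                "Sid": "ReadZoneObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


policy = read_only_prefix_policy("example-data-lake", "curated/finance")
print(json.dumps(policy, indent=2))

# The document could then be registered with boto3, for example:
# import boto3
# boto3.client("iam").create_policy(
#     PolicyName="finance-curated-read",  # hypothetical name
#     PolicyDocument=json.dumps(policy),
# )
```

In an SSO-based setup, a policy like this would typically be attached to a permission set or role per team rather than to individual users.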

Built on the Mactores Machine Learning & Data Lake Foundation Framework, the Aedeon Data Lake is a highly configurable, secure, scalable, automated, multi-account AWS environment aligned with AWS best practices. Data governance and enhanced security are guaranteed even as multiple user accounts run analytics workloads in the efficient, cost-effective, cloud-based data lake environment.

Specifically focusing on the multi-account strategy, Mactores implemented the following core elements of the Mactores Data Lake Foundation Framework as part of the data platform modernization strategy for DocuSign:

- Multi-account monitoring

- Security baseline with preventative and detective controls

- Identity and access management for multiple teams with SSO-based groups

- Centralized logging and compliance management

- Automation with infrastructure as code (IaC)

- Account Vending Machine and Add-On for environment extension

- Data Lake Zones with Ingestion Patterns for real-time and batch processing

- Data Lake Job Monitoring and orchestration using Managed Airflow
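The article does not describe the zone layout, so the following is only a sketch of one common convention for "Data Lake Zones with Ingestion Patterns": a hypothetical `raw` → `cleansed` → `curated` progression with Hive-style partitions, where streaming data is partitioned by hour and batch loads by day. The zone names, partition keys, and source names are all assumptions. An orchestrated job (for example, an Airflow task) could use a helper like `next_zone` to derive where its output belongs.

```python
from datetime import datetime, timezone

# Hypothetical zone names; the source does not specify the actual zone layout.
ZONES = ("raw", "cleansed", "curated")


def zone_key(zone: str, source: str, event_time: datetime, filename: str,
             streaming: bool = False) -> str:
    """Build a Hive-style partitioned S3 key for an object in a data lake zone.

    Streaming data is partitioned down to the hour so consumers can pick up
    new partitions quickly; batch loads are partitioned by day. Pass a
    timezone-aware datetime; naive values are treated as local time.
    """
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone}")
    ts = event_time.astimezone(timezone.utc)
    parts = [zone, f"source={source}", f"dt={ts:%Y-%m-%d}"]
    if streaming:
        parts.append(f"hour={ts:%H}")
    parts.append(filename)
    return "/".join(parts)


def next_zone(key: str) -> str:
    """Rewrite a key so it points at the same partition in the next zone."""
    zone, rest = key.split("/", 1)
    idx = ZONES.index(zone)  # raises ValueError for an unknown zone
    if idx == len(ZONES) - 1:
        raise ValueError(f"{zone} is the last zone")
    return f"{ZONES[idx + 1]}/{rest}"


arrival = datetime(2024, 3, 5, 14, 30, tzinfo=timezone.utc)
raw_key = zone_key("raw", "esign_events", arrival, "part-0001.json", streaming=True)
print(raw_key)             # raw/source=esign_events/dt=2024-03-05/hour=14/part-0001.json
print(next_zone(raw_key))  # cleansed/source=esign_events/dt=2024-03-05/hour=14/part-0001.json
```

Hive-style `key=value` partition folders are a convention that catalog crawlers and query engines such as Athena can map to partition columns, which is why the sketch uses them.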

The Result: Modern Data Lake for Fast Insights

Mactores was responsible for deploying the production architecture and executing the production implementation strategy for this project. We combined the modern Aedeon Data Lake platform with advanced AWS infrastructure, security and governance, storage, and machine learning capabilities to deliver the following business value for DocuSign:

- Fast Access to Data: Access to production-ready data previously required months of manual effort at high cost. The Aedeon Data Lake largely automates the process, so production-ready data is always available.

- Self-Service Actionable Insights: Business teams can develop custom analytics use cases through the simplified visualization and analytics interface.

- Scalable Ingestion: The data lake is designed for pre-processing and preparing data streams at scale as soon as the information is sourced.

- Fully-Managed Data Analytics Platform: Designed to enable, govern, and engage data, combining proven data engineering processes with industry domain expertise.

- End-to-End Data Management: Advanced tooling for data quality, governance, and lifecycle management.

- Faster Insights with Minimal Risk: Self-service preparation and data cataloging made data assets usable for the entire organization with a user-friendly interface and external analytics integrations.

This holistic approach to data platform modernization, combining the leading data technologies that make up the Aedeon Data Lake with Mactores’ expert consulting services, helped DocuSign unlock unprecedented efficiencies and competitive differentiation with data. The Mactores strategy and Aedeon technology have become not only a profit center for DocuSign but also an innovation center: real-time insights from a rich information portfolio now help the company scale its business and drive innovation.